In a previous project, we studied how an individual’s emotional state relates to, and is affected by, the surrounding environment. Building on those findings, we now aim to build an attentive artifact that mediates an individual’s spatial experience based on their emotional state.

To build this artifact, we will work in three areas: emotion recognition, spatial representation of emotions, and spatial interactions based on emotions.

Emotion Recognition

We will build a real-time multimodal emotion recognition system. We are currently reviewing the literature on such systems. Based on this review, and on our decisions about emotional representation and spatial interactions, we will choose the modalities and then start building!

This part of the project may involve image processing, signal processing, and machine learning, as well as devices such as biosensors, EEG, and Kinect 2.

Emotional Representation

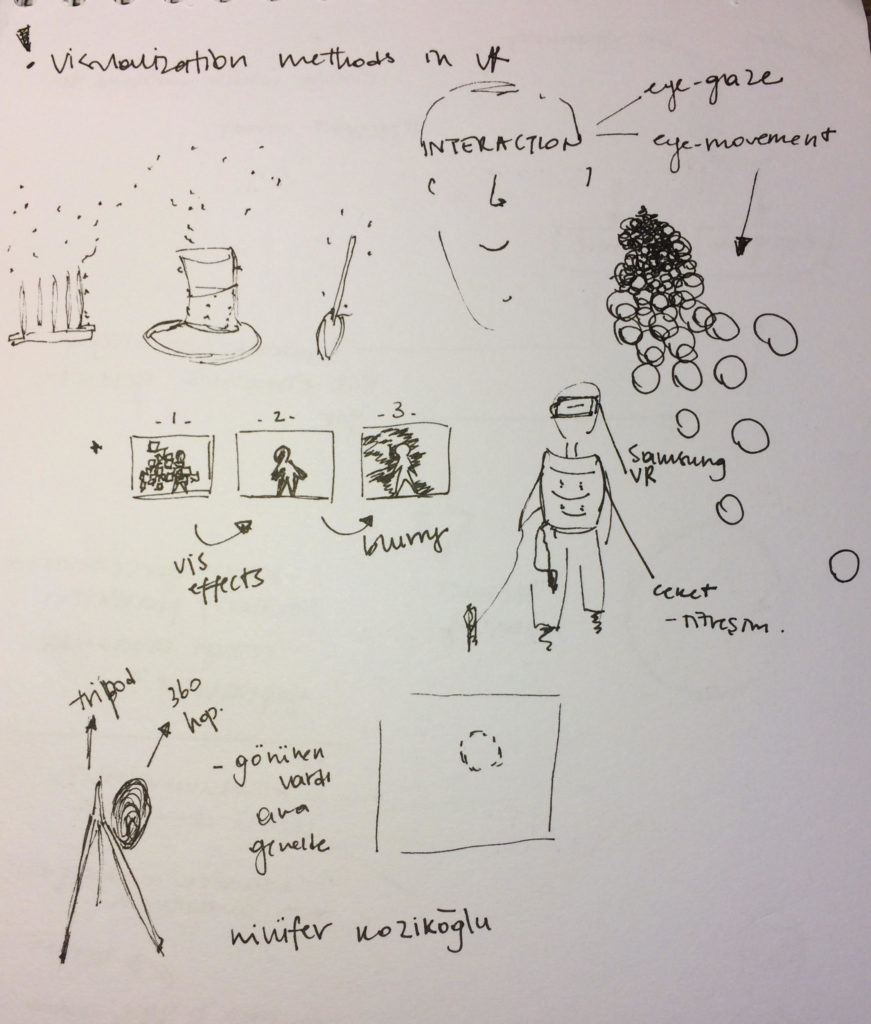

In this part of the project, we will explore 3D methods for representing emotions spatially. We will model these spatial emotion representations and integrate them into an AR/VR environment, through which we will mediate the individual’s spatial and emotional experience. We are currently reviewing the literature and combining what we learn with the results of our previous work.

This part of the project will involve 3D visualization methods, 3D modelling (probably with Blender), and integrating these models into AR using Unity, Vuforia, and HoloLens.

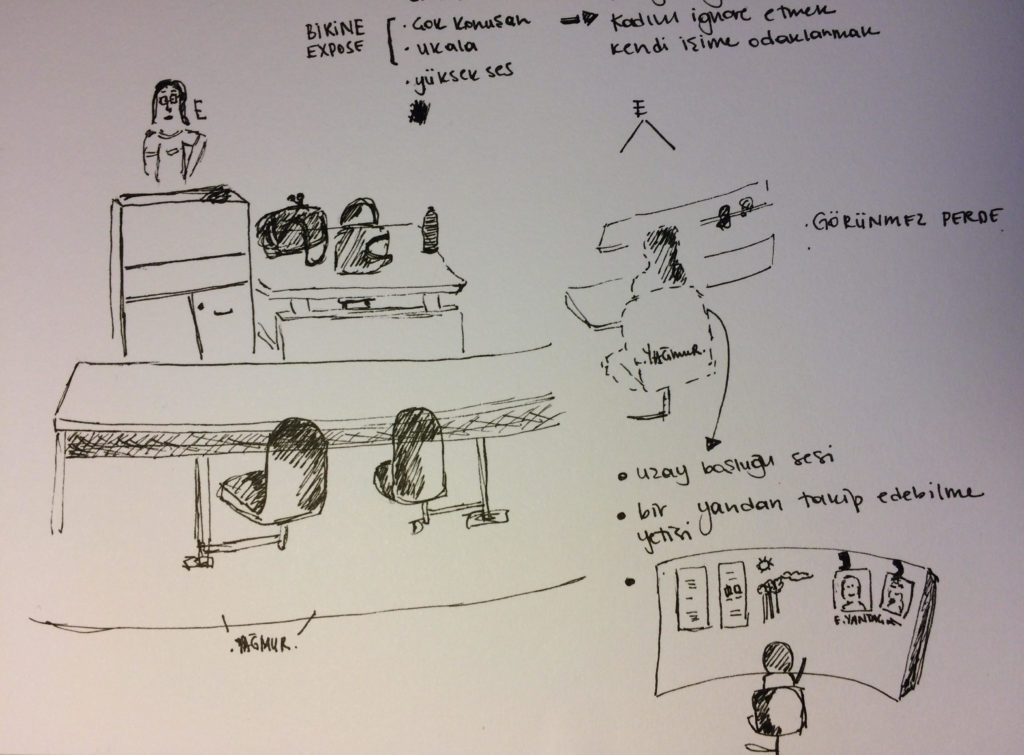

Spatial Interactions

In this part, we will define the interactions between an individual and the surrounding space. More specifically, we will examine both the interactions and the space in the context of mediating the individual’s emotional state. These interactions will connect the user’s emotional state to the spatial representation in the AR simulation.

This part of the project will involve working with Unity, Vuforia, and HoloLens.